Hey all! This update is coming in a little late, since I’ve been working through a bunch of interviews and projects for companies recently. After I get the definitive yes/no from those companies, I’ll see if I can post the projects here, but until then, here’s this week’s update.

This week, I learned in depth how to combat overfitting and faulty initialization, preprocess data, and a few state-of-the-art learning rate and gradient descent rules (including AdaGrad, RMSProp, and Adam). I also read some original ML research, and got started on doing my ML “Hello World”: the MNIST problem.

The section on overfitting was complete and explained the subject well, but the bit on initialization left me questioning a few things. For example, why do we use a sigmoid activation function if a lot of the possible values it can take (near 1, 0, and 0.5) are practically linear? Well, the answer, from the cutting-edge research, seems to be “we shouldn’t”. Xavier Glorot’s paper, Understanding the difficulty of training deep feedforward neural networks, explored a number of activation functions, and found that the sigmoid was one of the least useful, compared to the hyperbolic tangent and the softsign. To quote the paper, “We find that the logistic sigmoid activation is unsuited for deep networks with random initialization because of its mean value, which can drive especially the top hidden layer into saturation. […] We find that a new non-linearity that saturates less can often be beneficial.”

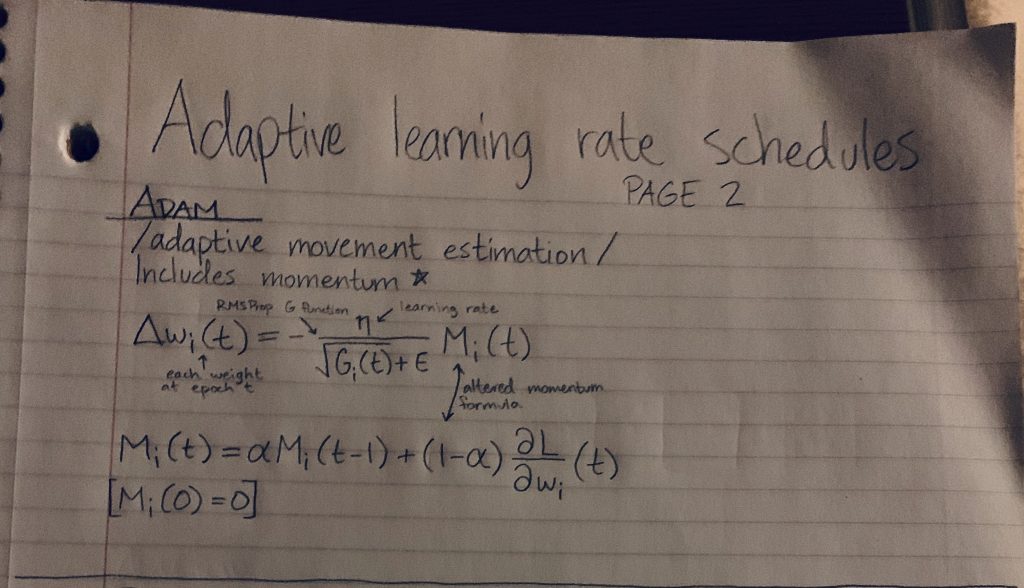

Within the course I’m using, section 41 deals with the state-of-the-art gradient descent rules. It’s exceedingly math-heavy, and took me a while to get through and understand. I found it helpful to copy down on paper all the relevant formulas, label the variables, and explain in short words what the different rules were for. Here’s part of a page of my notes.

I did teach myself enough calculus to understand the concepts of instantaneous rate of motion and partial derivative, which is all I’ve needed so far for ML. Here was the PDF I learned from, and which I will return to if I need to learn more.

The sections on preprocessing weren’t difficult to understand, but they did gloss over a decent amount of the detailed process, and I anticipate having a few minor difficulties when I start actually trying to preprocess real data. The part I don’t anticipate having any trouble with is deciding when to use binary versus one-hot encoding: they explain that bit relatively well. (Binary encoding involves sequential ordering of the categories, then converting those categories to binary and storing the 1 or 0 in an individual variable. One-hot encoding involves giving each individual item a 1 in a specific spot along a long list of length corresponding to the number of categories. You’d use binary encoding for large numbers of categories, but one-hot encoding for smaller numbers.)

The last thing I did was get started with MNIST. For anyone who hasn’t heard of it before, the MNIST data set is a large, preprocessed set of handwritten digits which can be categorically sorted by an ML algorithm into ten categories (the digits 0-9). I don’t have a lot to say about my process for doing this circa this week, but I’ll have an in-depth update on it next week when I finish it.